The National Basketball Association (NBA) is a professional basketball league in the United States. There are $30$ teams in the league, divided evenly into $2$ conferences: the Eastern Conference and the Western Conference.

In the regular season, each team plays $82$ games. Regular-season standings rank the teams in each conference by their win-loss records.
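Ranking one conference by record can be sketched as a sort on wins. This is a minimal sketch, assuming a hypothetical `Record` type holding a team name, wins, and losses. Because every team plays the same $82$ games, sorting by wins is equivalent to sorting by winning percentage. Tied teams simply keep their input order here, since the actual tie-breaking procedure is not described.

```python
from typing import NamedTuple

GAMES_PER_SEASON = 82

class Record(NamedTuple):
    # Hypothetical record type: team name plus regular-season wins and losses.
    name: str
    wins: int
    losses: int

def conference_standings(records: list[Record]) -> list[Record]:
    """Rank one conference's teams by win-loss record, best first.

    sorted() is stable, so tied teams keep their input order; the NBA's
    real tie-breaking rules are NOT modeled here.
    """
    for r in records:
        # Every team must have completed its full regular season.
        if r.wins + r.losses != GAMES_PER_SEASON:
            raise ValueError(f"{r.name} has not played {GAMES_PER_SEASON} games")
    return sorted(records, key=lambda r: r.wins, reverse=True)

east = [Record("A", 50, 32), Record("B", 60, 22),
        Record("C", 41, 41), Record("D", 41, 41)]
print([r.name for r in conference_standings(east)])  # ['B', 'A', 'C', 'D']
```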

Before the play-in tournament was introduced, the top $8$ teams from each conference advanced directly to the playoffs. If teams are tied in the standings, a tie-breaking procedure determines their playoff seeding.

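Starting in the $2020\text{-}21$ season, the NBA added a play-in tournament that gives the $9^\text{th}$ and $10^\text{th}$ place teams in each conference the opportunity to earn a spot in the playoffs. The top $6$ teams in each conference still qualify directly, while the $7^\text{th}$ through $10^\text{th}$ place teams compete for the last two spots (the $7^\text{th}$ and $8^\text{th}$ seeds). It works as follows: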

- The $7^\text{th}$ and $8^\text{th}$ place teams play a game to determine the $7^\text{th}$ seed. The winner advances to the playoffs.

- The $9^\text{th}$ and $10^\text{th}$ place teams play an elimination game. The loser is eliminated.

- The loser of the $7/8$ game and the winner of the $9/10$ game play an elimination game to determine the $8^\text{th}$ seed. The winner advances to the playoffs; the loser is eliminated.
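The three play-in games can be sketched as a single function. This is a minimal illustration, assuming a hypothetical `game(a, b)` callback that returns the winner of one game. Enumerating all $2^3$ combinations of results shows that only the $7^\text{th}$ or $8^\text{th}$ place team can earn the $7^\text{th}$ seed, while any of the four teams can earn the $8^\text{th}$ seed, for $6$ distinct seedings in total.

```python
from itertools import product
from typing import Callable

# Hypothetical callback: given two teams, returns the winner of one game.
Game = Callable[[str, str], str]

def play_in(seventh: str, eighth: str, ninth: str, tenth: str,
            game: Game) -> tuple[str, str]:
    """Return (7th seed, 8th seed) for one conference's play-in tournament."""
    # Game 1: 7th vs 8th place. The winner is the 7th seed.
    seed7 = game(seventh, eighth)
    loser78 = eighth if seed7 == seventh else seventh
    # Game 2: 9th vs 10th place. The loser is eliminated.
    winner910 = game(ninth, tenth)
    # Game 3: loser of game 1 vs winner of game 2. The winner is the 8th seed.
    seed8 = game(loser78, winner910)
    return seed7, seed8

def all_outcomes(teams: tuple[str, str, str, str]) -> set[tuple[str, str]]:
    """Collect the distinct (7th seed, 8th seed) pairs over all 2**3 results."""
    outcomes = set()
    for bits in product((0, 1), repeat=3):
        picks = iter(bits)  # per game: 0 = first team listed wins, 1 = second wins
        outcomes.add(play_in(*teams, lambda a, b: (a, b)[next(picks)]))
    return outcomes

results = all_outcomes(("T7", "T8", "T9", "T10"))
print(len(results))                        # 6
print(sorted({s7 for s7, _ in results}))   # ['T7', 'T8']
```

Several bit patterns collapse to the same seeding: when the loser of the $7/8$ game also wins game 3, the result of the $9/10$ game does not affect the seeds.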

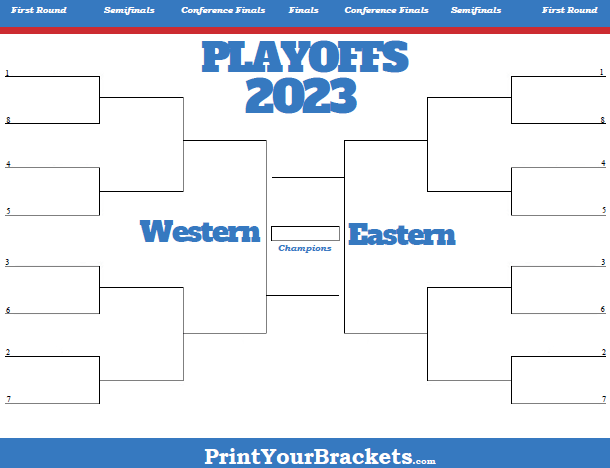

Once the final playoff seeding is determined, the $16$ playoff teams are paired into best-of-$7$ series: the first team to win $4$ games in a series advances to the next round. The first round is followed by the conference semifinals, then the conference finals, then the NBA Finals. The team that wins the NBA Finals is the NBA Champion.
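The series arithmetic can be sketched in a few lines. Purely for illustration, this assumes that games are independent and that one team wins each game with a fixed probability $p$; the problem itself makes no such assumption. A team wins the series in exactly $4 + k$ games ($k = 0, \dots, 3$) when it wins game $4 + k$ and exactly $3$ of the first $3 + k$, so $P = \sum_{k=0}^{3} \binom{3+k}{k} p^4 (1-p)^k$. The helper `series_per_round` is likewise hypothetical; it counts series per round for $16$ teams, giving $8 + 4 + 2 + 1 = 15$ series in total.

```python
from math import comb

WINS_NEEDED = 4  # best-of-7: the first team to win 4 games advances

def series_win_probability(p: float) -> float:
    """Chance a team wins a best-of-7 series, ASSUMING each game is an
    independent win with fixed probability p (illustrative only)."""
    q = 1.0 - p
    # Win in 4 + k games: win the final game and lose exactly k of the
    # first 3 + k games, which can happen in comb(3 + k, k) orders.
    return sum(comb(WINS_NEEDED - 1 + k, k) * p**WINS_NEEDED * q**k
               for k in range(WINS_NEEDED))

def series_per_round(teams: int = 16) -> list[int]:
    """Number of series in each round of a single-elimination bracket."""
    rounds = []
    while teams > 1:
        rounds.append(teams // 2)  # each series pairs two teams
        teams //= 2                # one winner per series advances
    return rounds

print(series_win_probability(0.5))  # 0.5 (by symmetry)
print(series_per_round())           # [8, 4, 2, 1]
```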

The matchups for each round are determined using a traditional bracket structure, shown below: